Can't load tokenizer for 'openai/clip-vit-large-patch14' · Issue #659 · CompVis/stable-diffusion · GitHub

Can't load tokenizer for 'openai/clip-vit-large-patch14' · Issue #49 · williamyang1991/Rerender_A_Video · GitHub

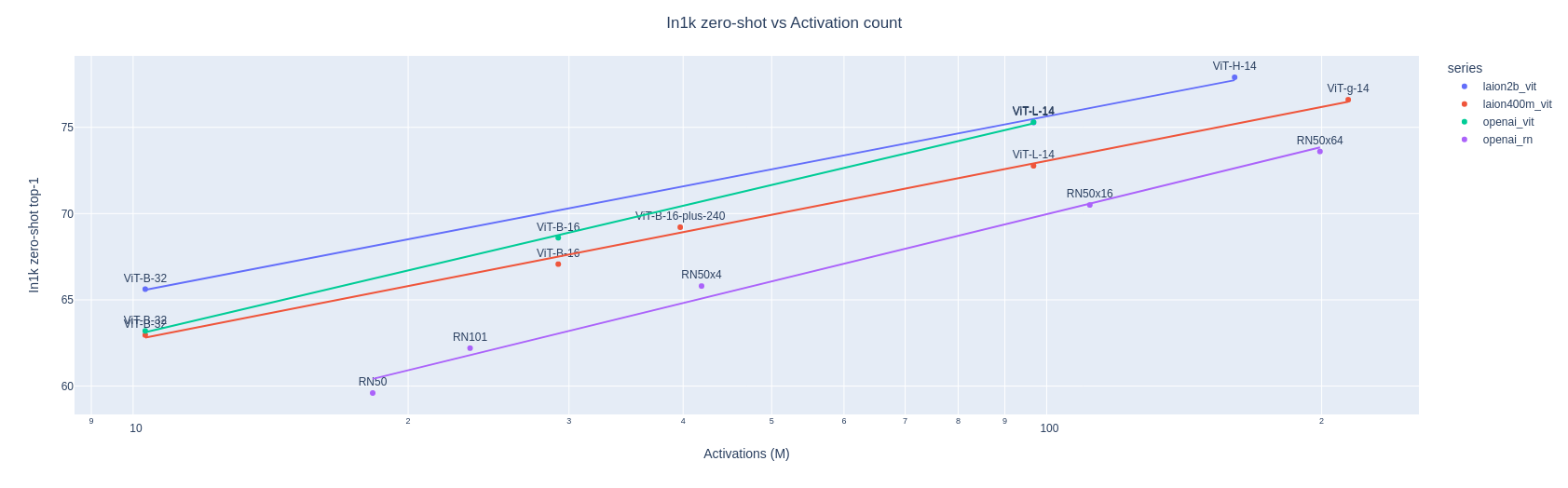

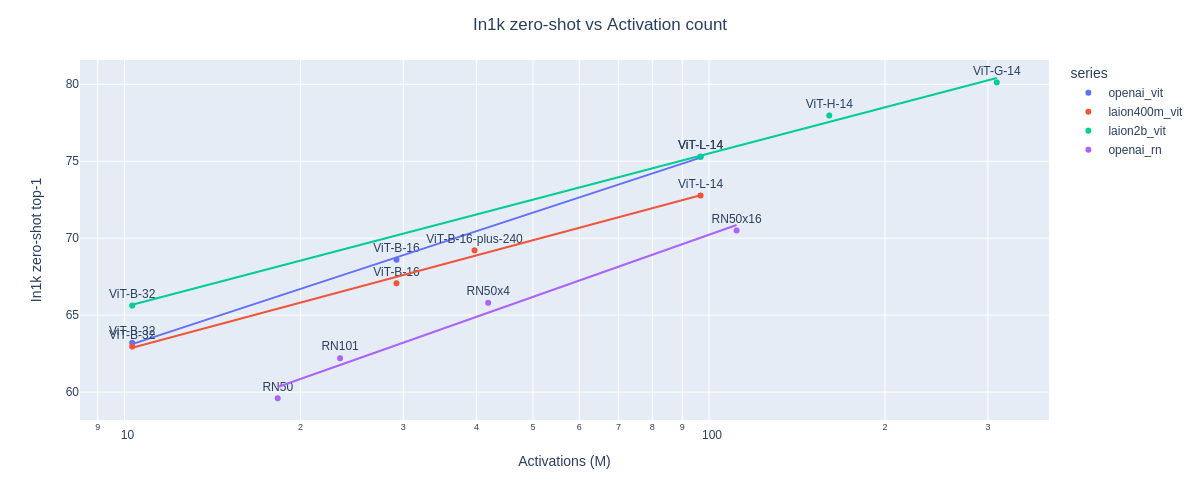

open_clip: Welcome to an open source implementation of OpenAI's CLIP (Contrastive Language-Image Pre-training).

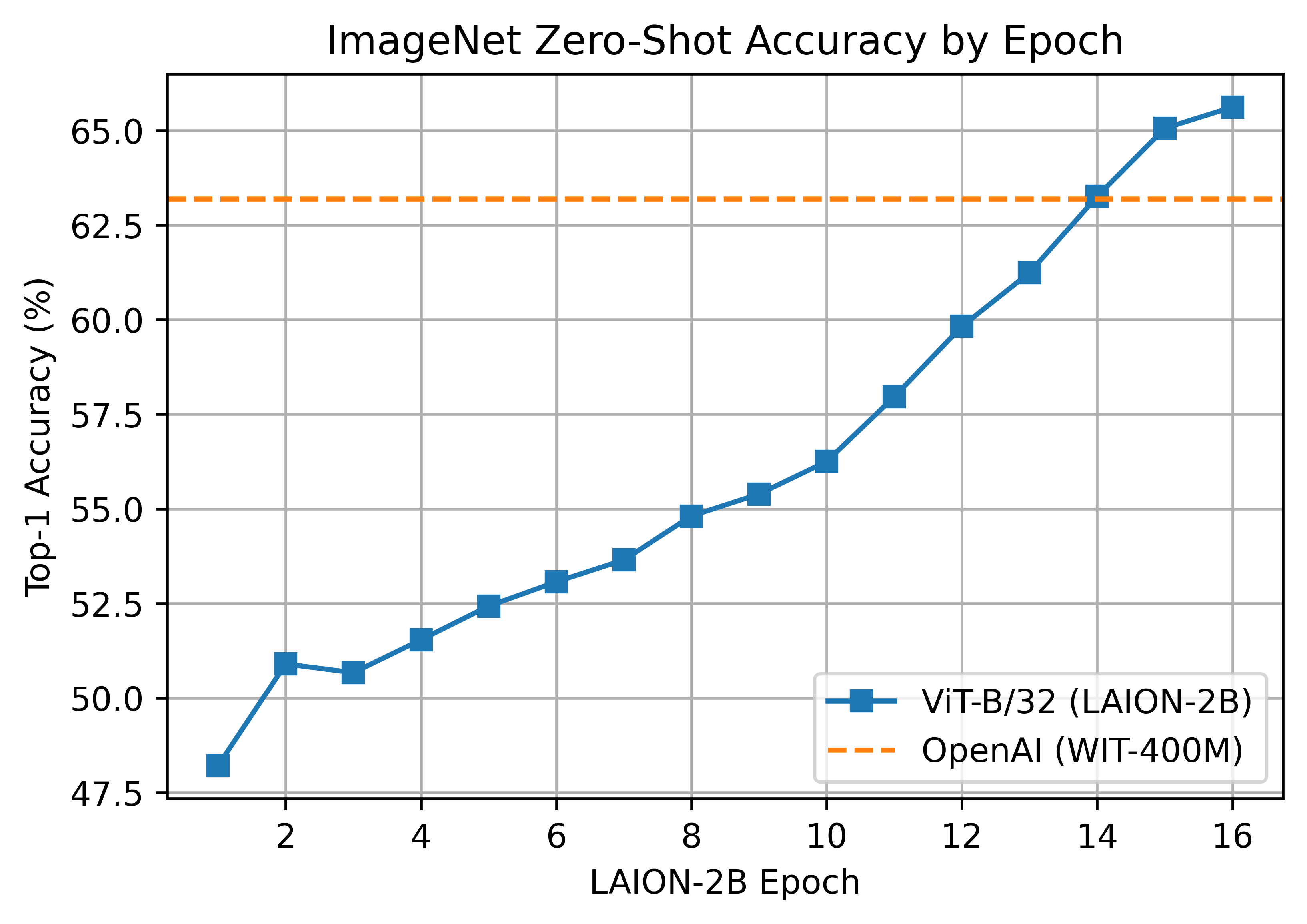

Aran Komatsuzaki on X: "+ our own CLIP ViT-B/32 model trained on LAION-400M that matches the performance of OpenAI's CLIP ViT-B/32 (as a taste of much bigger CLIP models to come)."

Review — CLIP: Learning Transferable Visual Models From Natural Language Supervision | by Sik-Ho Tsang | Medium

Romain Beaumont on X: "@AccountForAI and I trained a better multilingual encoder aligned with openai clip vit-l/14 image encoder. https://t.co/xTgpUUWG9Z 1/6 https://t.co/ag1SfCeJJj" / X

Mastering the Huggingface CLIP Model: How to Extract Embeddings and Calculate Similarity for Text and Images | Code and Life

openai/clip-vit-large-patch14 cannot be traced with torch_tensorrt.compile · Issue #367 · openai/CLIP · GitHub