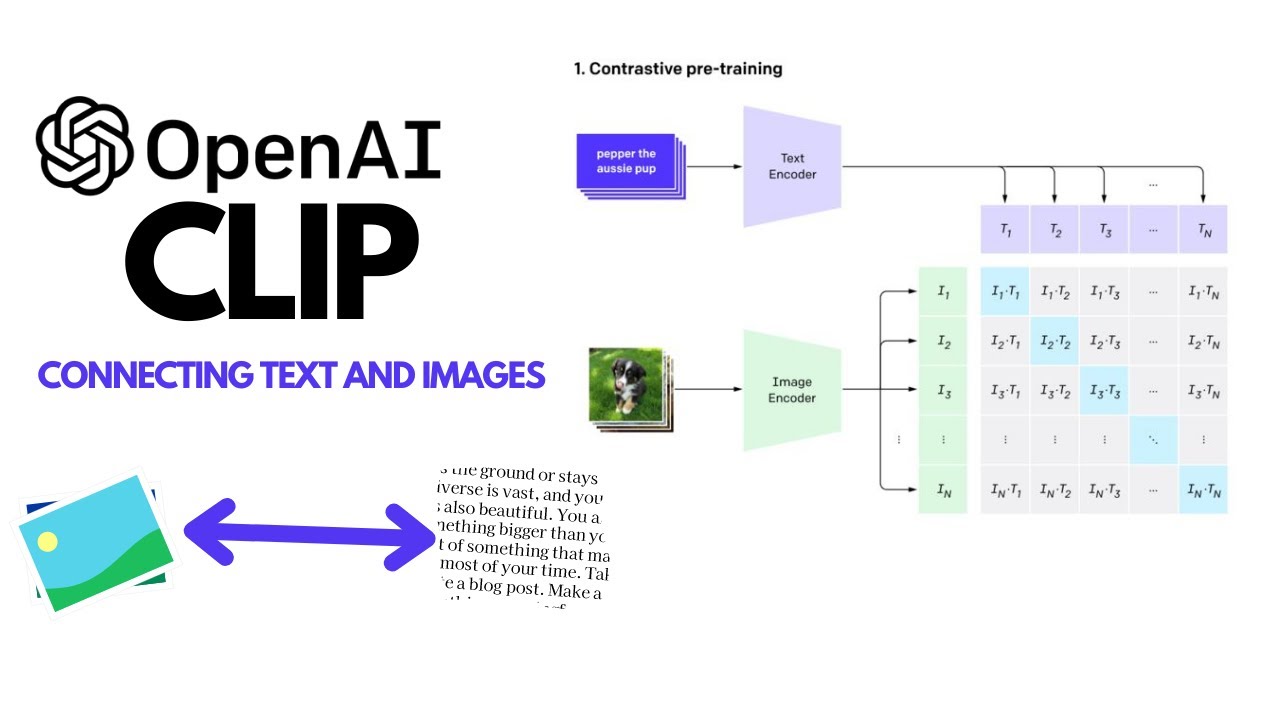

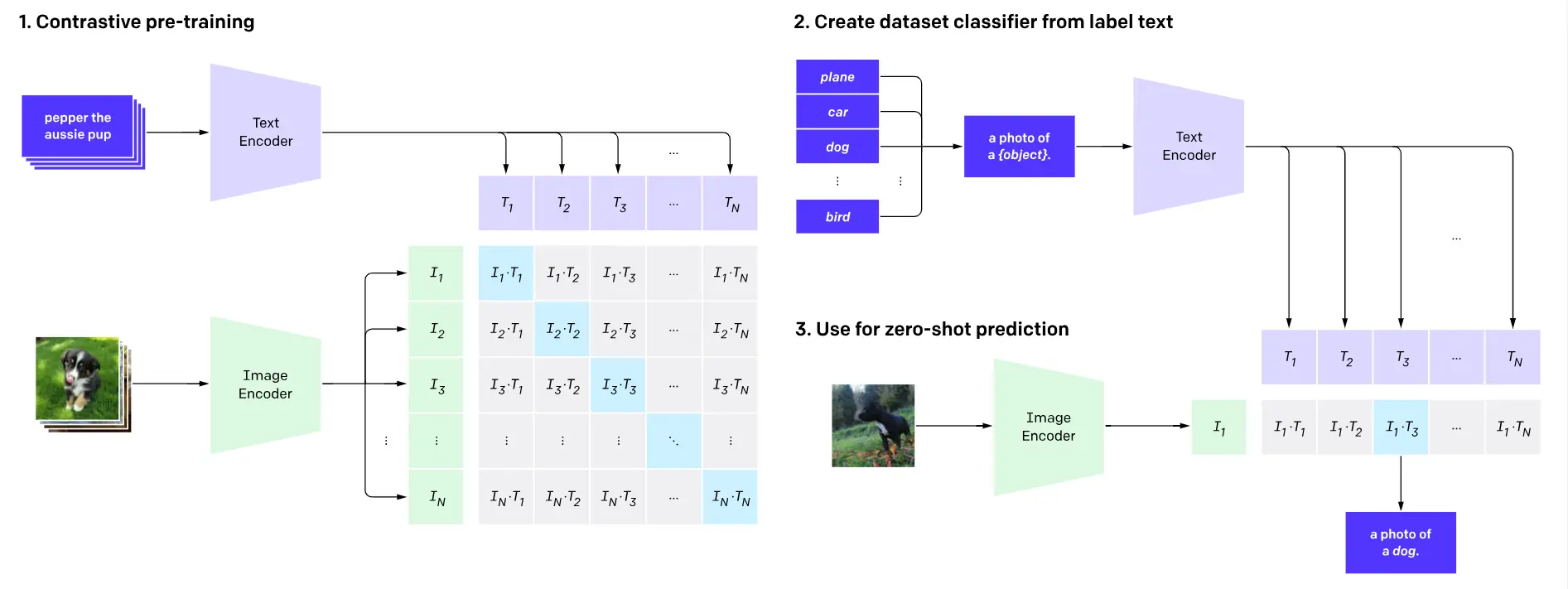

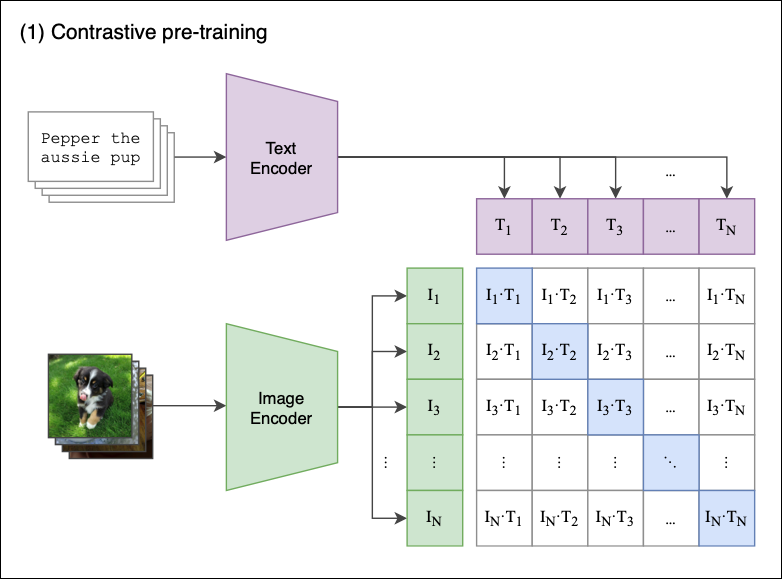

GitHub - openai/CLIP: CLIP (Contrastive Language-Image Pretraining), Predict the most relevant text snippet given an image

CLIP: The Most Influential AI Model From OpenAI — And How To Use It | by Nikos Kafritsas | Towards Data Science

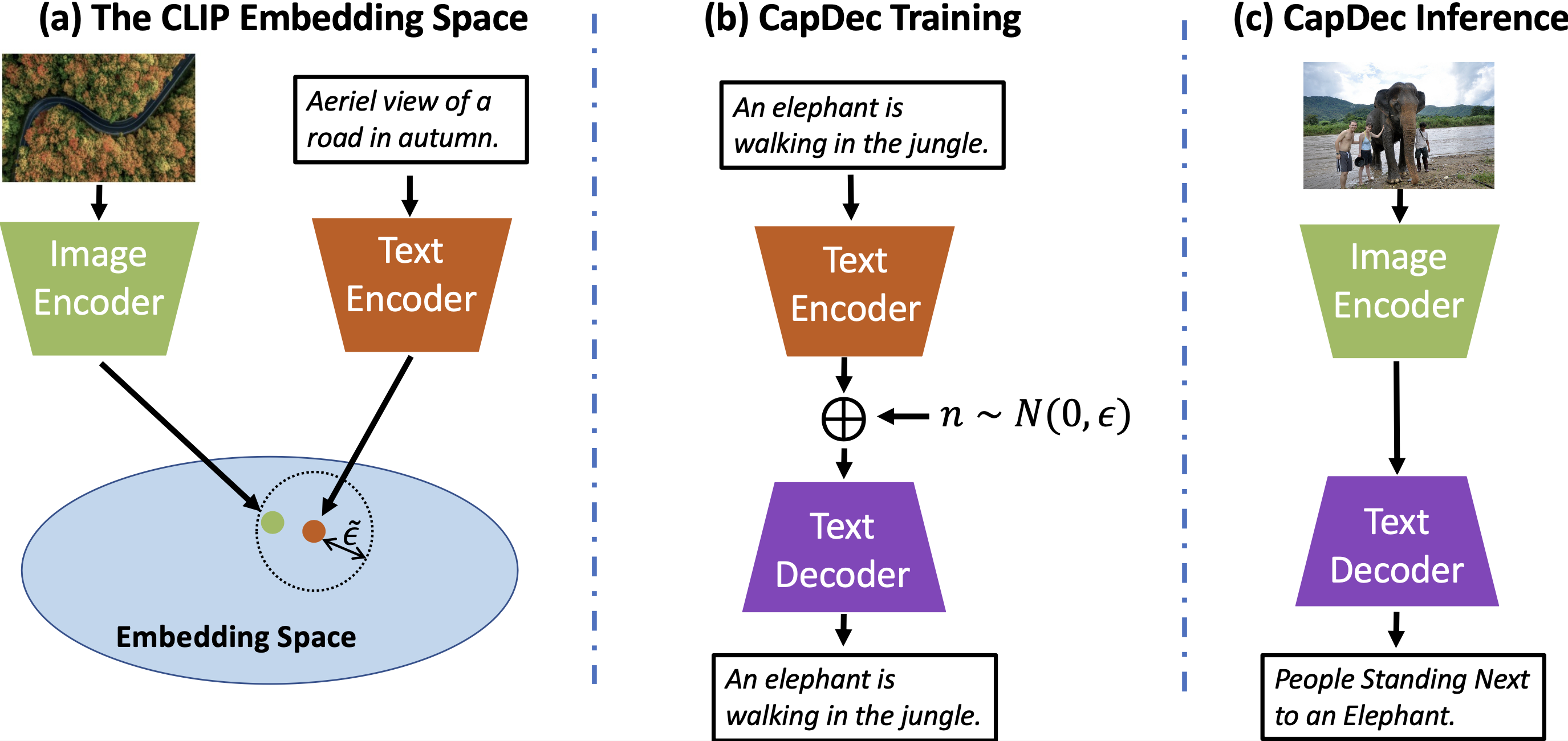

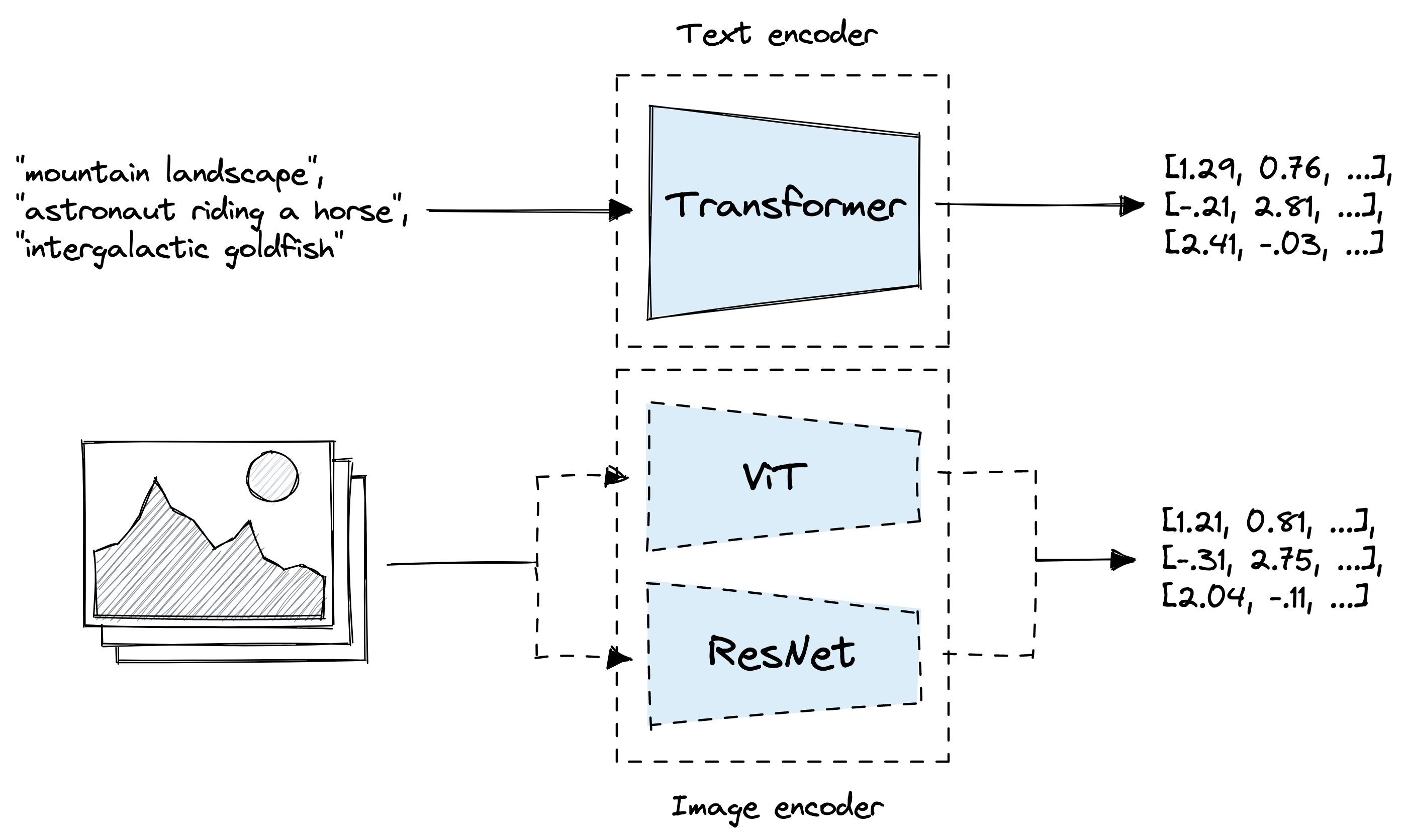

Example showing how the CLIP text encoder and image encoders are used... | Download Scientific Diagram

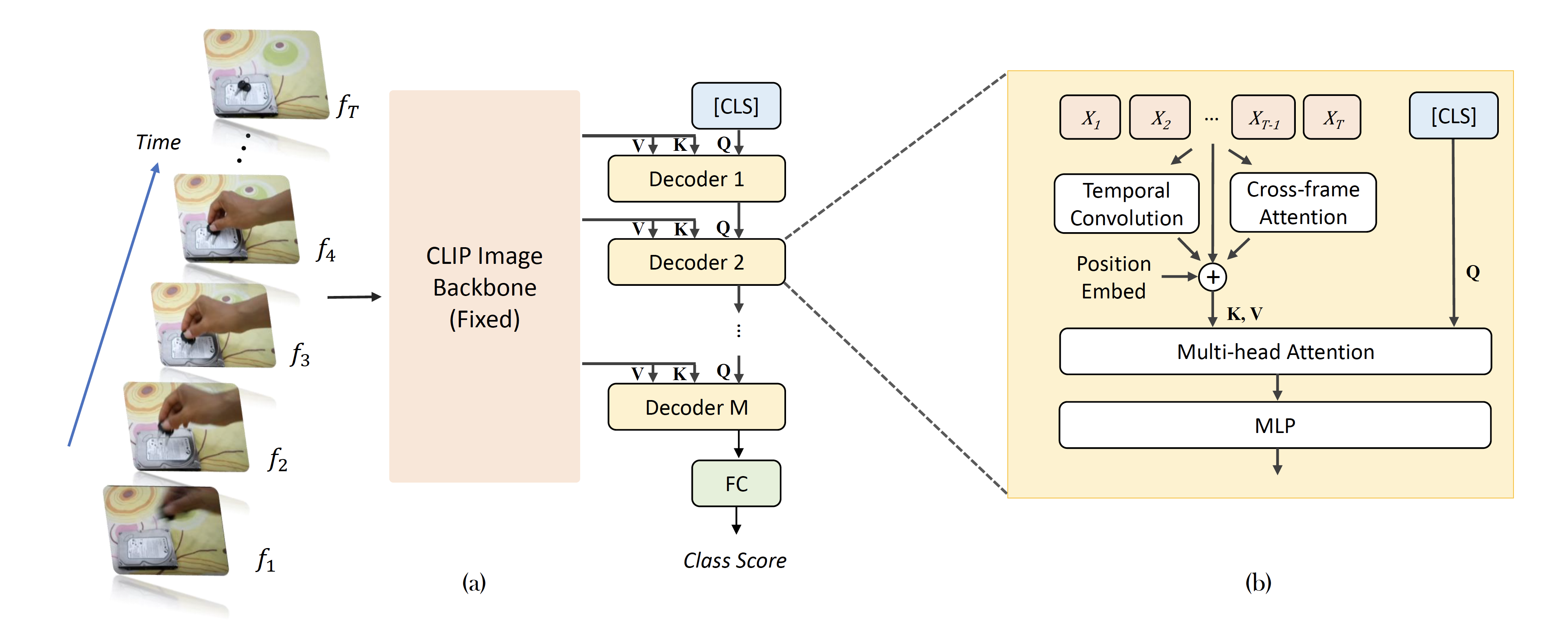

How do I decide on a text template for CoOp:CLIP? | AI-SCHOLAR | AI: (Artificial Intelligence) Articles and technical information media

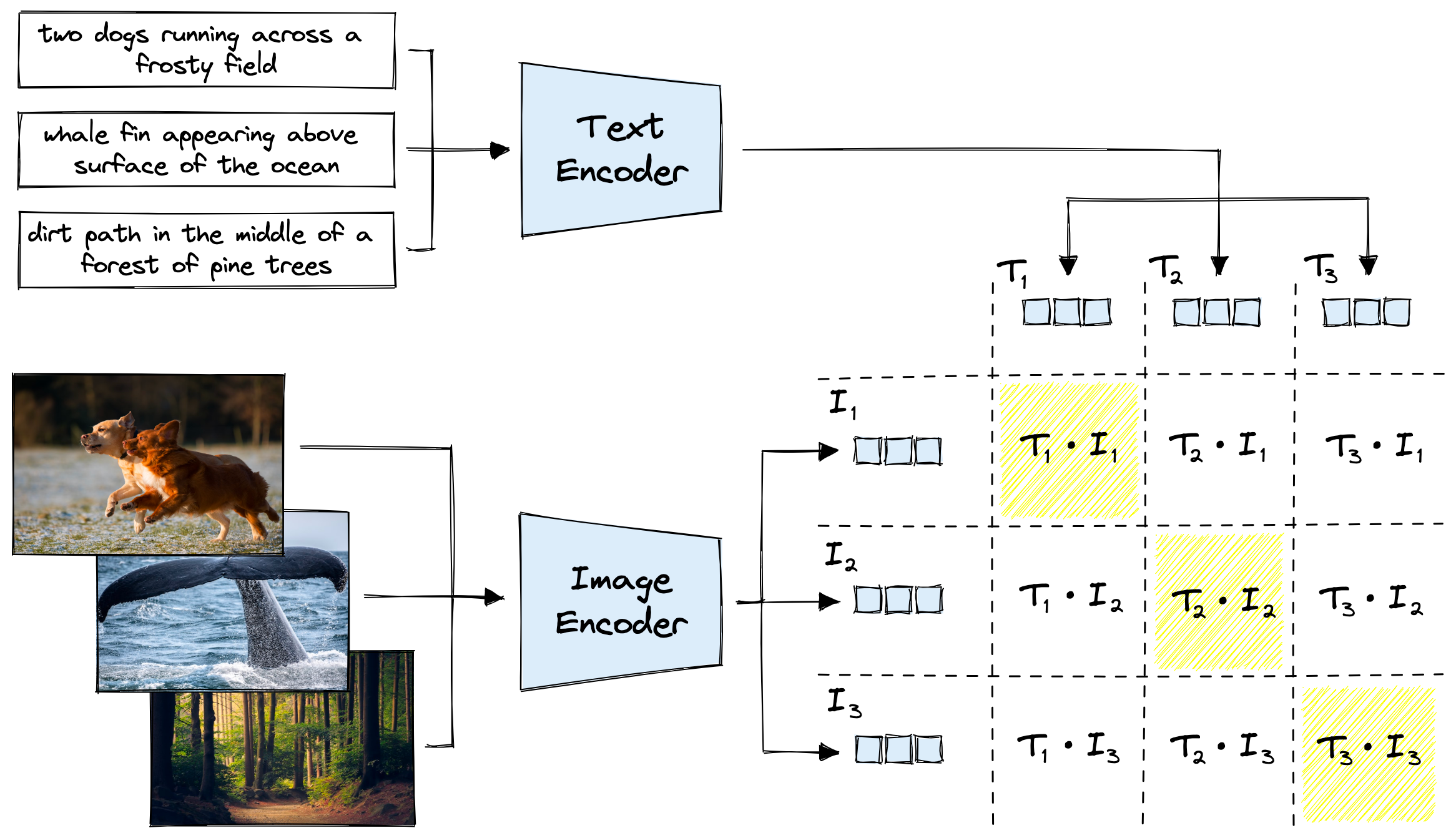

OpenAI's unCLIP Text-to-Image System Leverages Contrastive and Diffusion Models to Achieve SOTA Performance | Synced

Romain Beaumont on X: "@AccountForAI and I trained a better multilingual encoder aligned with openai clip vit-l/14 image encoder. https://t.co/xTgpUUWG9Z 1/6 https://t.co/ag1SfCeJJj" / X

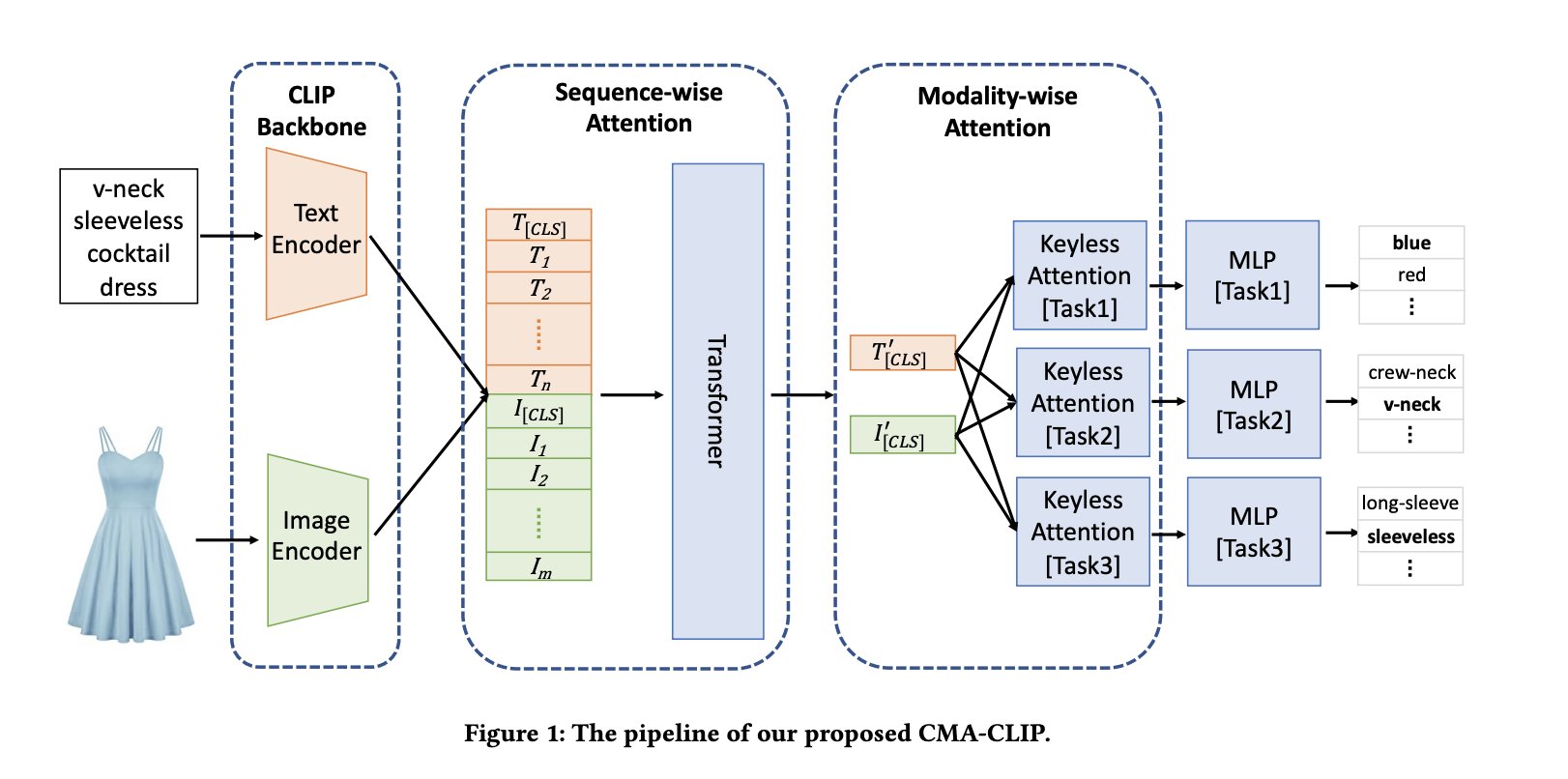

AK on X: "CMA-CLIP: Cross-Modality Attention CLIP for Image-Text Classification abs: https://t.co/YL9gQy0ZtR CMA-CLIP outperforms the pre-trained and fine-tuned CLIP by an average of 11.9% in recall at the same level of precision

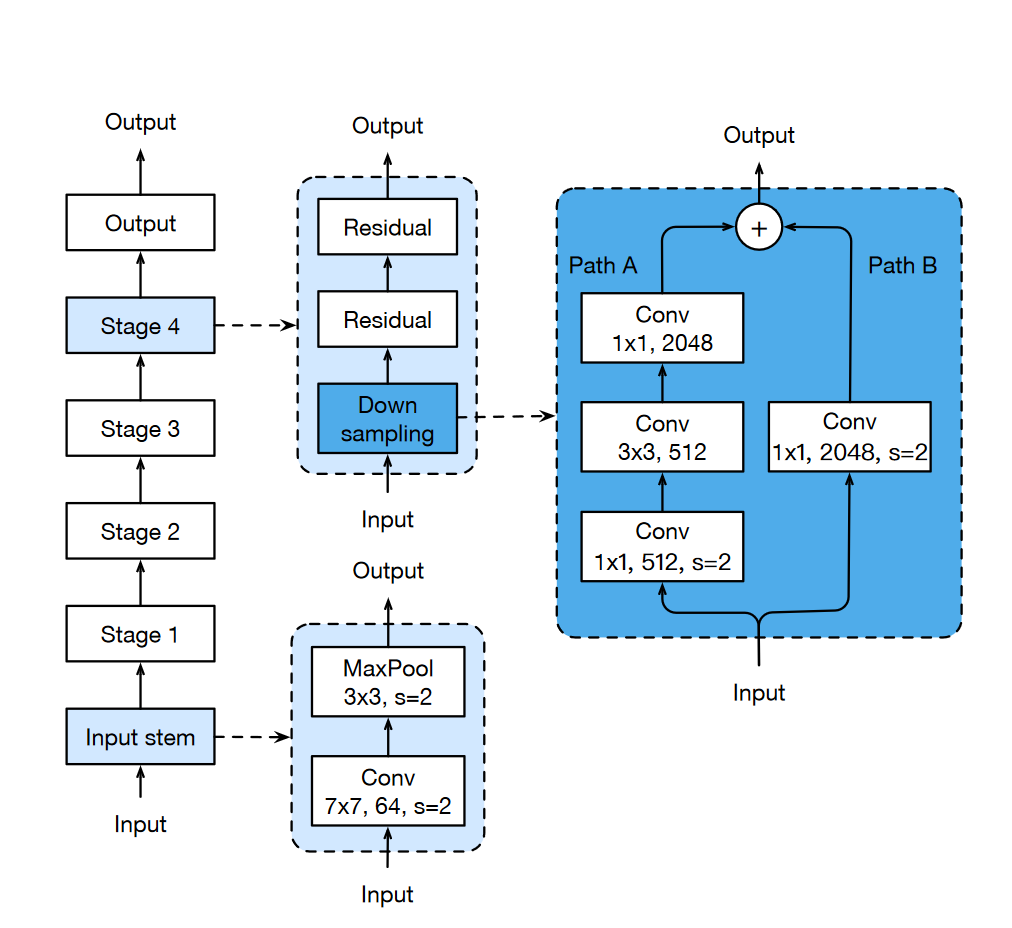

Process diagram of the CLIP model for our task. This figure is created... | Download Scientific Diagram