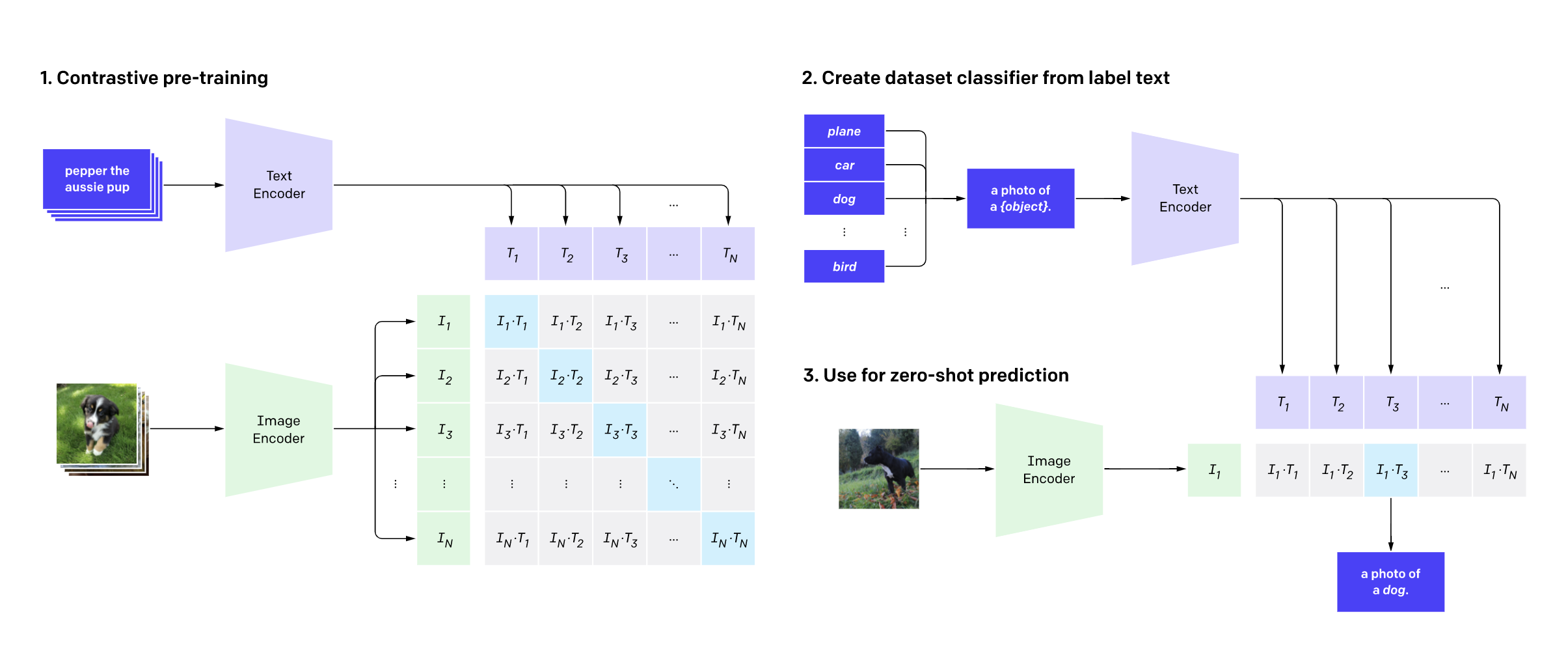

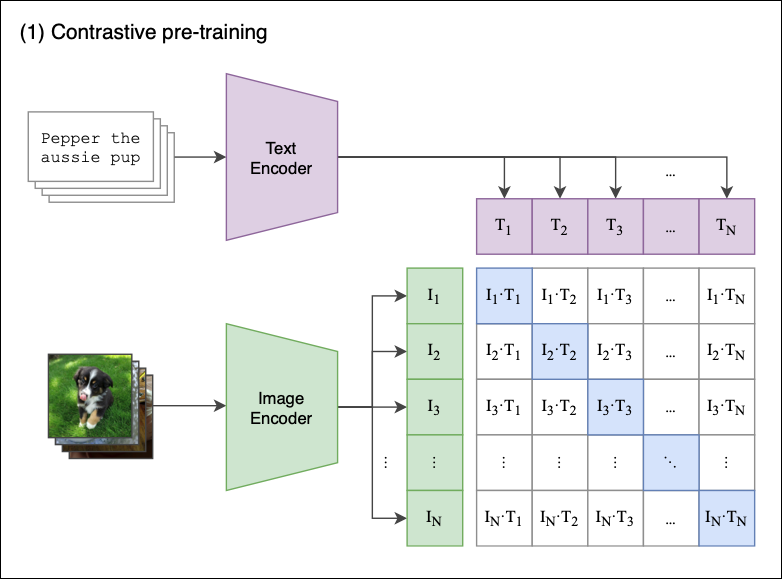

GitHub - openai/CLIP: CLIP (Contrastive Language-Image Pretraining), Predict the most relevant text snippet given an image
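The repository description summarizes what CLIP does at inference time: it embeds an image and a set of candidate text snippets into a shared space, then picks the snippet whose embedding is closest to the image's. The sketch below shows only that scoring step, under clear assumptions. The three-dimensional vectors are hypothetical stand-ins for real encoder outputs. `logit_scale=100.0` is an assumed temperature multiplier. The helper names `l2_normalize` and `zero_shot_probs` are illustrative, not part of the openai/CLIP API.

```python
import math


def l2_normalize(v):
    # Scale a vector to unit length so dot products become cosine similarities.
    norm = math.sqrt(sum(x * x for x in v))
    return [x / norm for x in v]


def zero_shot_probs(image_emb, text_embs, logit_scale=100.0):
    """Return a softmax distribution over candidate text snippets for one image.

    image_emb:   embedding of the image (list of floats)
    text_embs:   one embedding per candidate snippet, same dimension
    logit_scale: temperature multiplier (100.0 is an assumed value)
    """
    img = l2_normalize(image_emb)
    logits = []
    for t in text_embs:
        txt = l2_normalize(t)
        cos = sum(a * b for a, b in zip(img, txt))
        logits.append(logit_scale * cos)
    # Numerically stable softmax: subtract the max logit before exponentiating.
    m = max(logits)
    exps = [math.exp(l - m) for l in logits]
    total = sum(exps)
    return [e / total for e in exps]


# Hypothetical toy embeddings standing in for real image/text encoder outputs.
image = [0.9, 0.1, 0.0]
captions = {
    "a photo of a dog": [1.0, 0.0, 0.0],
    "a photo of a cat": [0.0, 1.0, 0.0],
    "a photo of a car": [0.0, 0.0, 1.0],
}
probs = zero_shot_probs(image, list(captions.values()))
best = max(zip(captions, probs), key=lambda pair: pair[1])[0]
print(best)  # a photo of a dog
```

Because both sides are normalized, the ranking depends only on cosine similarity. The scale factor only sharpens the resulting distribution.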

Niels Rogge on X: "The model simply adds bounding box and class heads to the vision encoder of CLIP, and is fine-tuned using DETR's clever matching loss. 🔥 📃 Docs: https://t.co/fm2zxNU7Jn 🖼️Gradio
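The post credits DETR's matching loss. Its core step is a one-to-one (bipartite) assignment between predicted boxes and ground-truth objects, chosen to minimize a total cost. The loss is then computed only on the matched pairs, and unmatched predictions are trained toward a "no object" class. The sketch below is a simplified illustration, not DETR's implementation. It uses brute-force search over permutations in place of the Hungarian algorithm, so it is only practical for tiny inputs. Its cost is just `-p(true class) + box_weight * L1(box)`, and it omits DETR's generalized-IoU term. The function names and `box_weight=1.0` are hypothetical.

```python
from itertools import permutations


def l1(box_a, box_b):
    # L1 distance between two boxes given as (cx, cy, w, h).
    return sum(abs(a - b) for a, b in zip(box_a, box_b))


def match(predictions, targets, box_weight=1.0):
    """Return the minimum-cost one-to-one matching as (prediction_idx, target_idx) pairs.

    predictions: list of (class_probs dict, box); requires len(predictions) >= len(targets)
    targets:     list of (class_label, box)
    """
    def cost(p_idx, t_idx):
        probs, p_box = predictions[p_idx]
        label, t_box = targets[t_idx]
        # A higher probability for the true class and a closer box both lower the cost.
        return -probs.get(label, 0.0) + box_weight * l1(p_box, t_box)

    best_cost, best_assign = float("inf"), None
    # Brute force: try every ordered choice of distinct predictions, one per target.
    for perm in permutations(range(len(predictions)), len(targets)):
        total = sum(cost(p, t) for t, p in enumerate(perm))
        if total < best_cost:
            best_cost, best_assign = total, perm
    return [(p, t) for t, p in enumerate(best_assign)]


# Hypothetical example: three predictions, two ground-truth objects.
preds = [
    ({"cat": 0.1, "dog": 0.8}, (0.5, 0.5, 0.2, 0.2)),
    ({"cat": 0.7, "dog": 0.2}, (0.2, 0.3, 0.1, 0.1)),
    ({"cat": 0.1, "dog": 0.1}, (0.9, 0.9, 0.1, 0.1)),
]
targets = [("cat", (0.2, 0.3, 0.1, 0.1)), ("dog", (0.5, 0.5, 0.2, 0.2))]
print(match(preds, targets))  # [(1, 0), (0, 1)]
```

Prediction 2 is left unmatched. In a DETR-style loss it would be supervised as "no object".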

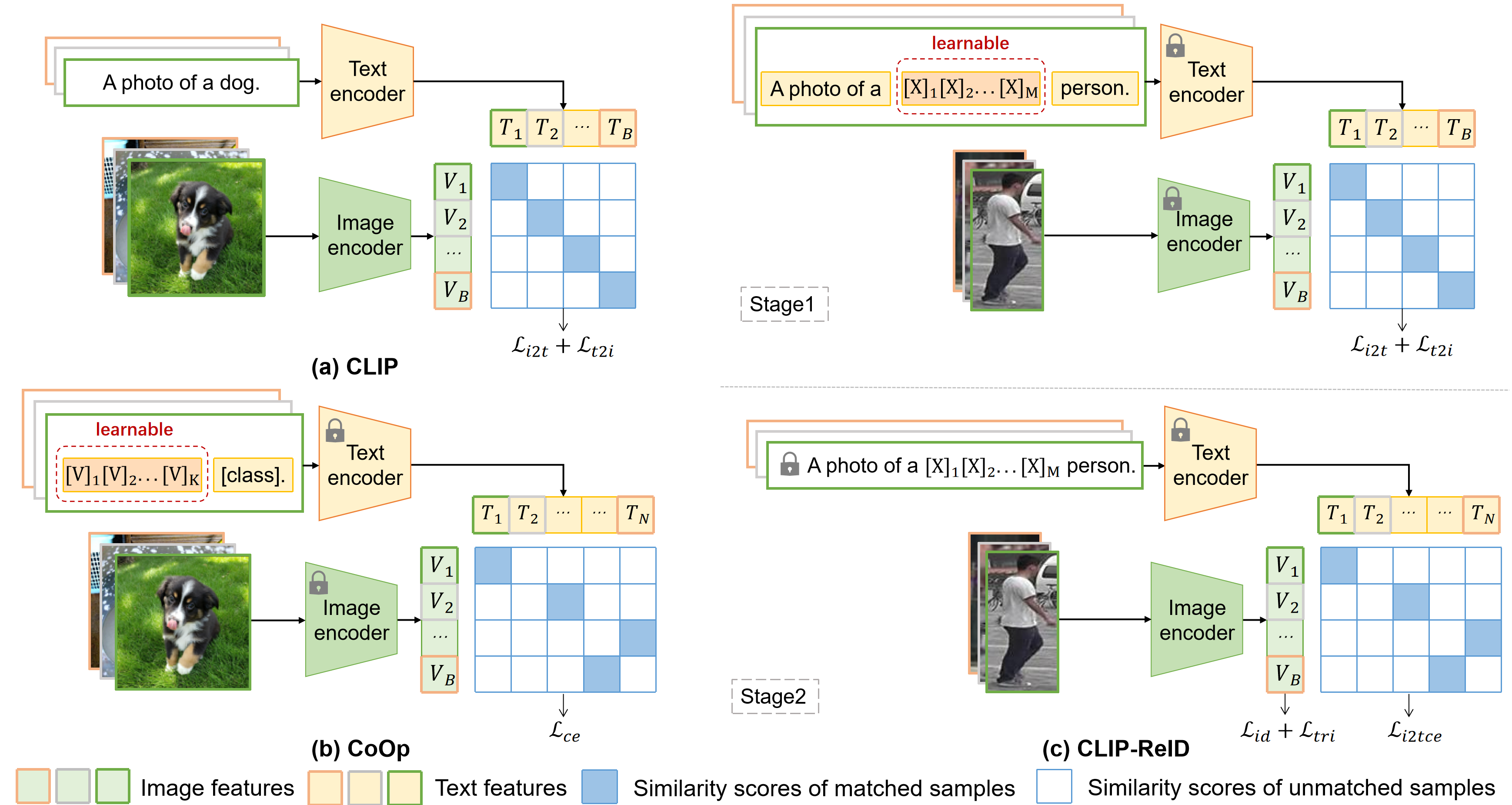

CLIP-ReID: Exploiting Vision-Language Model for Image Re-Identification without Concrete Text Labels | Papers With Code

CLIP: The Most Influential AI Model From OpenAI — And How To Use It | by Nikos Kafritsas | Towards Data Science

A Simple Way of Improving Zero-Shot CLIP Performance | by Alexey Kravets | Nov, 2023 | Towards Data Science

OpenAI's CLIP Explained and Implementation | Contrastive Learning | Self-Supervised Learning - YouTube
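The contrastive objective behind CLIP can be summarized as follows. In a batch of N matched image-text pairs, each image should score its own caption above the other N-1 captions, and each caption should likewise score its own image highest. The loss averages the image-to-text and text-to-image cross-entropies. The sketch below assumes a fixed `temperature=0.07`; in CLIP this is a learned parameter initialized to that value. The helper names are illustrative. Pure Python is used only to keep the example dependency-free.

```python
import math


def normalize(v):
    # Scale a vector to unit length.
    n = math.sqrt(sum(x * x for x in v))
    return [x / n for x in v]


def cross_entropy_row(logits, target):
    # -log softmax(logits)[target], computed with the log-sum-exp trick for stability.
    m = max(logits)
    log_sum = m + math.log(sum(math.exp(l - m) for l in logits))
    return log_sum - logits[target]


def clip_loss(image_embs, text_embs, temperature=0.07):
    """Symmetric contrastive loss; pair i is (image_embs[i], text_embs[i])."""
    imgs = [normalize(v) for v in image_embs]
    txts = [normalize(v) for v in text_embs]
    n = len(imgs)
    # logits[i][j] = cosine(image i, text j) / temperature
    logits = [
        [sum(a * b for a, b in zip(imgs[i], txts[j])) / temperature for j in range(n)]
        for i in range(n)
    ]
    # Image-to-text: row i should pick caption i.
    loss_i2t = sum(cross_entropy_row(logits[i], i) for i in range(n)) / n
    # Text-to-image: column j should pick image j.
    columns = [[logits[i][j] for i in range(n)] for j in range(n)]
    loss_t2i = sum(cross_entropy_row(columns[j], j) for j in range(n)) / n
    return (loss_i2t + loss_t2i) / 2


# Hypothetical 2-pair batch: correctly aligned vs. swapped captions.
aligned = clip_loss([[1.0, 0.0], [0.0, 1.0]], [[1.0, 0.0], [0.0, 1.0]])
shuffled = clip_loss([[1.0, 0.0], [0.0, 1.0]], [[0.0, 1.0], [1.0, 0.0]])
print(aligned < shuffled)  # True
```

The other pairs in the batch serve as negatives. This is why larger batches give the objective more negatives per step.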

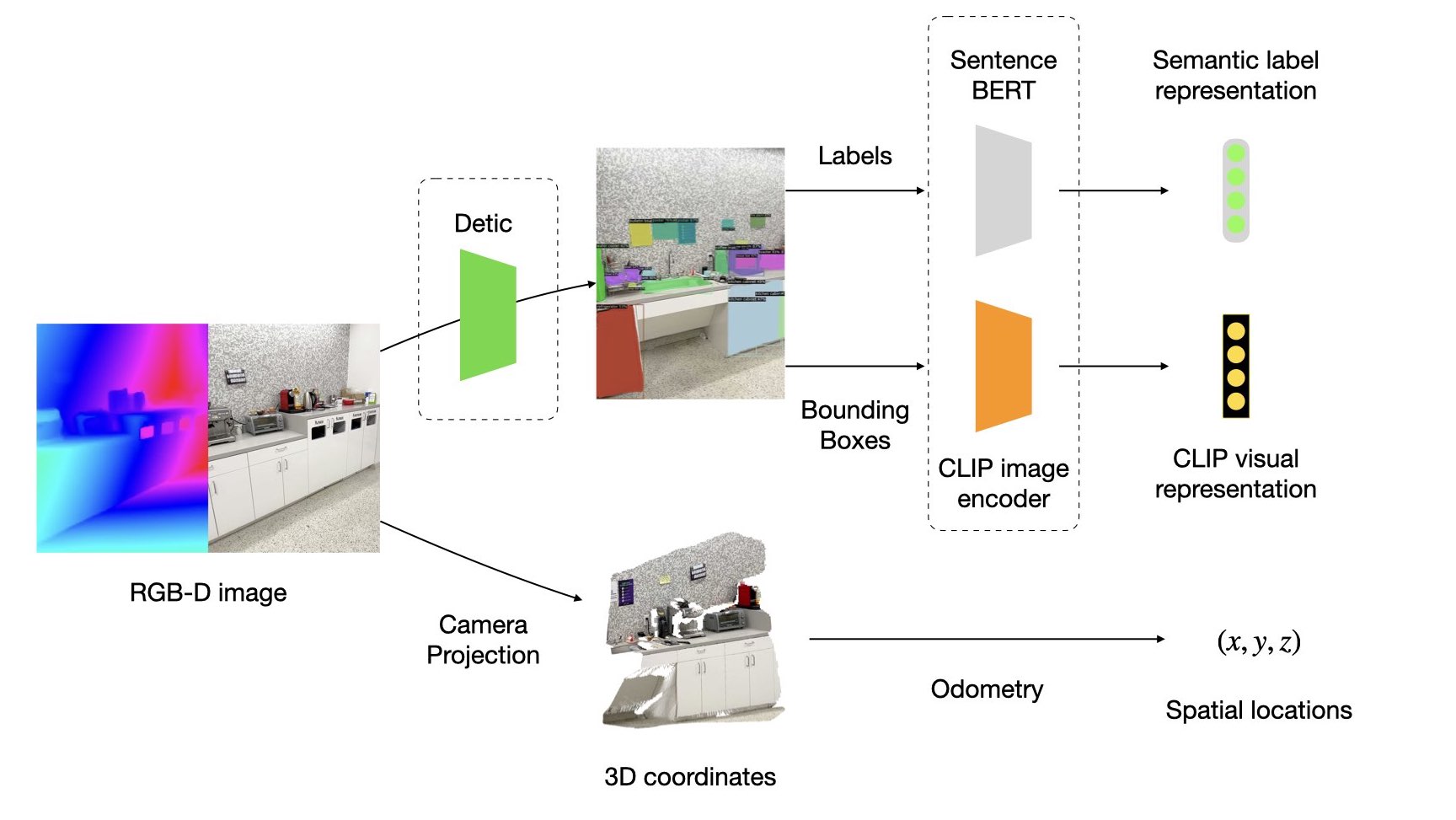

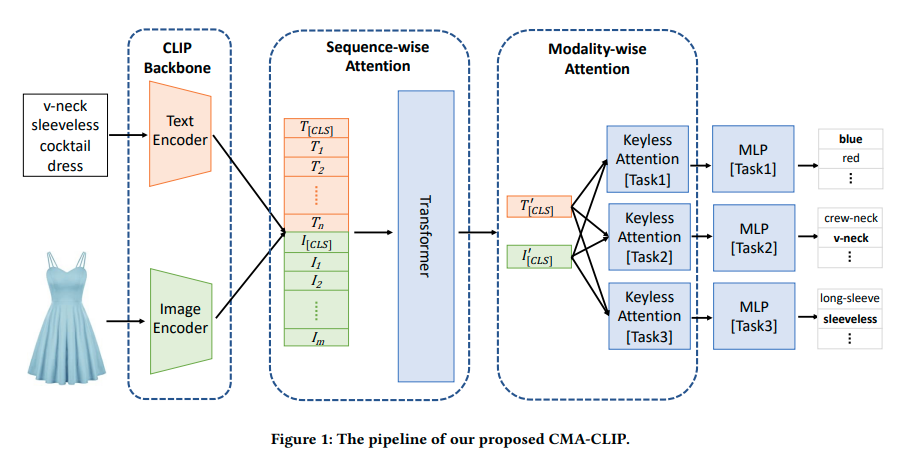

Overview of our method. The image is encoded into a feature map by the...

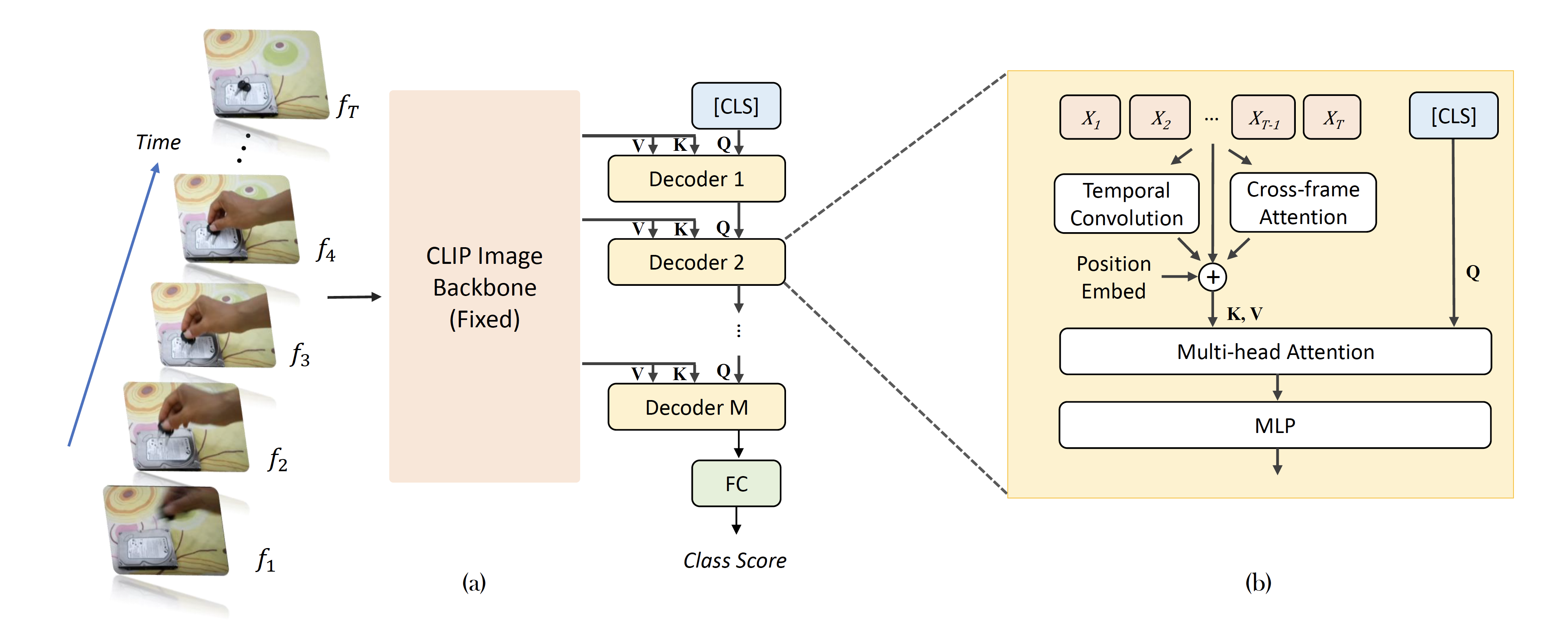

Overview of VT-CLIP where text encoder and visual encoder refers to the...

![CLIP-Forge: Towards Zero-Shot Text-to-Shape Generation | Semantic Scholar](https://d3i71xaburhd42.cloudfront.net/738e3e0623054da29dc57fc6aee5e6711867c4e8/3-Figure3-1.png)