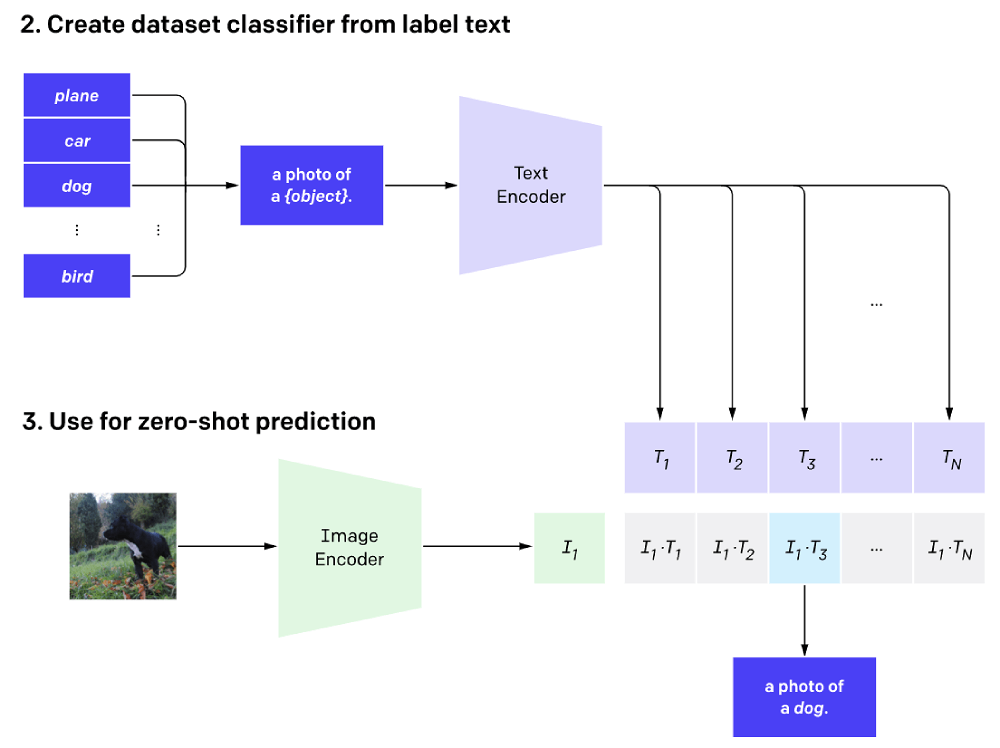

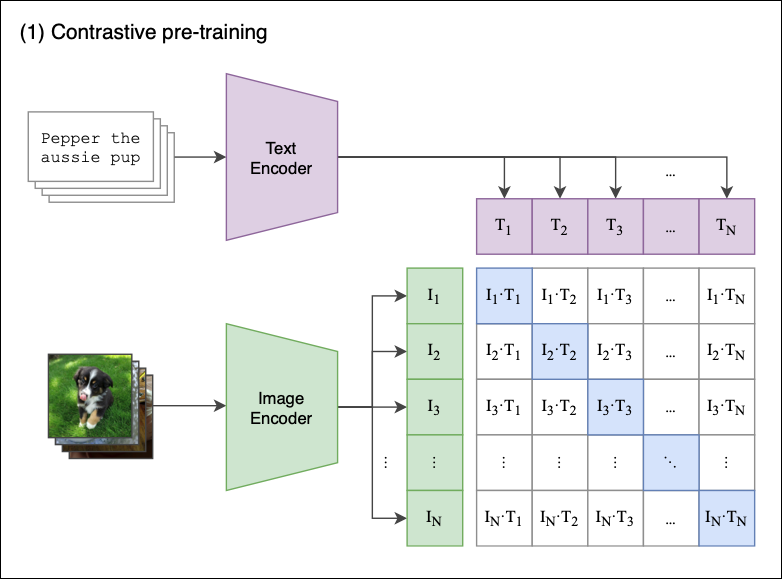

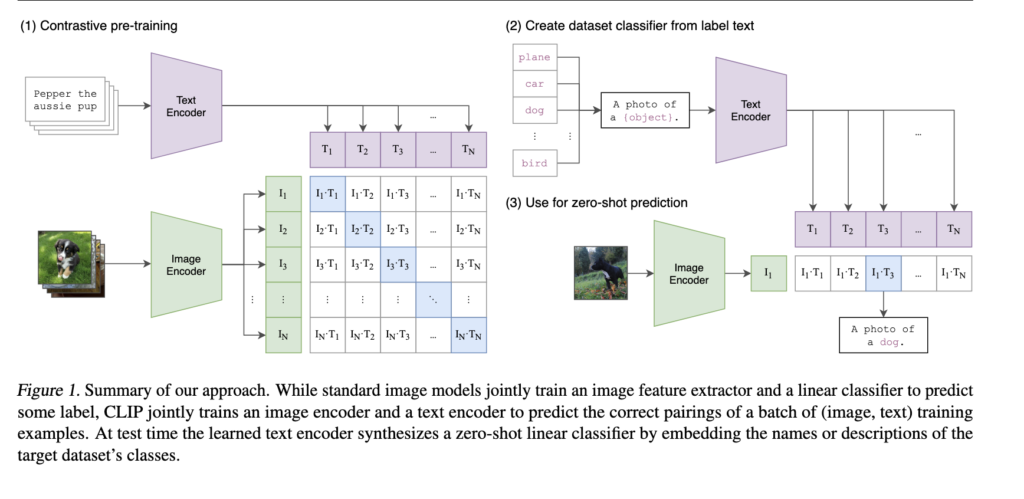

Understand CLIP (Contrastive Language-Image Pre-Training) — Visual Models from NLP | by mithil shah | Medium

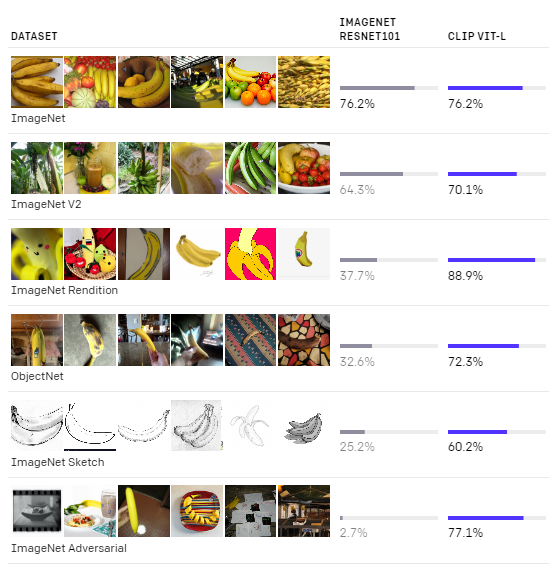

CLIP: The Most Influential AI Model From OpenAI — And How To Use It | by Nikos Kafritsas | Towards Data Science

Review — CLIP: Learning Transferable Visual Models From Natural Language Supervision | by Sik-Ho Tsang | Medium

Meet 'Chinese CLIP,' An Implementation of CLIP Pretrained on Large-Scale Chinese Datasets with Contrastive Learning - MarkTechPost

Example frames of the PSOV dataset. Each row represents a video clip...